Changelog

We're constantly working on new features and improvements. Here's what's new with Langdock.

Claude Sonnet 4.6

Anthropic’s Claude Sonnet 4.6 is now available in Langdock! 🚀

Today, we are adding Claude Sonnet 4.6 to Langdock. It's the most capable Sonnet model yet and brings improved instruction following, consistency, and coding skills.

You can read more about the launch in Anthropic’s official announcement.

Like its predecessor, Sonnet 4.5, the model is available in two versions: one with reasoning and one without.

The model is hosted in the EU and available to all workspaces now.

To get you started quickly, we have automatically enabled Sonnet 4.6 and Sonnet 4.6 Reasoning models in all workspaces where Sonnet 4.5 was previously activated. Admins can manage model access in workspace settings.

Subagents

We are excited to introduce Subagents, a powerful new capability that allows you to embed Agents inside other Agents to solve complex, multi-domain tasks! 🚀

Until now, each Agent worked on its own. With Subagents, a parent Agent can call on its embedded agents mid-conversation to handle specific parts of a task. A contract review can pull in both your Legal and Finance Agents. A customer onboarding flow can involve Sales, Product, and HR, all automatically, in one chat.

Why we built this

As teams have built more specialized Agents, a clear pattern emerged: the most valuable tasks often require expertise from more than one domain. A contract review might need both your Legal Agent and your Finance Agent. A customer onboarding flow might involve your Sales Agent, your Product Agent, and your HR Agent. Asking users to juggle these manually defeats the purpose of automation.

Subagents solve this by letting you nest Agents together so they can delegate tasks to each other within a single conversation. The result is a more connected, intelligent system where your best Agents work together - just like your best teams do.

When to use it

Subagents are especially useful when:

- Your Agent's instructions are getting too long. Split responsibilities across focused subagents instead of cramming everything into one.

- A task spans multiple domains. For example, a contract review that needs both Legal and Finance expertise, or a hiring workflow that involves HR and the hiring manager's team.

- You already have well-built Agents. Reuse them as subagents without duplicating setup or maintaining two versions of the same logic.

Good to know

Delegation happens automatically - the parent Agent decides when to call a subagent based on the conversation. Action approvals still apply: if a subagent needs to send an email or update a record, you'll be asked to approve first.

Only editors and owners of an Agent can add subagents to it. Nesting goes one level deep, meaning a subagent can't call other subagents. Subagents run independently and don't see the parent Agent's conversation history.
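As a rough mental model of these rules, here is a minimal Python sketch. The `Agent` class and `delegate` function are hypothetical names made up for illustration and are not part of any Langdock API. The sketch only shows two of the rules above: nesting stops after one level, and a subagent receives just the delegated task, not the parent's conversation history.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """Hypothetical stand-in for an Agent; not a Langdock API object."""
    name: str
    subagents: dict[str, "Agent"] = field(default_factory=dict)
    history: list[str] = field(default_factory=list)  # messages this agent has seen


def delegate(parent: Agent, subagent_name: str, task: str,
             parent_is_subagent: bool = False) -> str:
    """Hand one task from a parent Agent to one of its subagents."""
    # Nesting goes one level deep: a subagent cannot delegate further.
    if parent_is_subagent:
        raise PermissionError("a subagent can't call other subagents")
    child = parent.subagents[subagent_name]
    # The subagent runs independently: it receives only the delegated task,
    # never the parent's conversation history.
    child.history.append(task)
    return f"{child.name} handled: {task}"


legal = Agent("Legal Agent")
parent = Agent("Contract Review Agent", subagents={"legal": legal})
parent.history.append("Please review the attached supplier contract.")

reply = delegate(parent, "legal", "Check the liability clause.")
```

After this runs, `legal.history` contains only the delegated task, while the parent's history is untouched.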

Request Access to Agents

We've also added the ability for users to request access to Agents they don't have permission to use. This comes up in two places:

- Access denied screen: If you try to open an Agent you don't have access to, you can now request access directly from that screen.

- During a conversation: If the parent Agent tries to call a subagent you don't have access to, the delegation pauses, and you can request access right from the chat.

Agent owners can review and approve or deny requests in the Agent's sharing settings, keeping governance clean without slowing anyone down.

We can't wait to see what your Agents build together! 🙌

Claude Opus 4.6

Anthropic’s Claude Opus 4.6 is now available in Langdock! 🚀

As of today, we are adding Claude Opus 4.6 to Langdock. Opus 4.6 is Anthropic's new flagship model and a direct upgrade to Opus 4.5. It is more capable at coding, reasoning, and long-context tasks.

The model is also better at deciding on its own when to think more deeply and when a quick response is enough, so users should observe faster answers on simple questions without losing quality on harder ones.

You can read more about the launch in Anthropic’s official announcement.

Like its predecessor, Opus 4.5, the model is available in two versions: one with reasoning and one without.

The model is hosted in the EU and available to all workspaces now.

To get you started quickly, we have automatically enabled Opus 4.6 and Opus 4.6 Reasoning models in all workspaces where Opus 4.5 was previously activated. Admins can manage model access in workspace settings.

New Labels and Overview for Agents

We have redesigned the Agents page and added labels to Agents, making it easier to discover and organise agents and to find the right one for your needs!

As teams expand and create more agents, it can be tricky to find the right one. We want to give you the tools to organise, filter, and surface the most relevant agents, whether you are looking for something you used yesterday or exploring what your team has built.

What's new

1. Improved Navigation

The agents page arranges agents into clear sections to help you find what you need faster:

- Highlighted agents are featured by admins at the top of the page

- Recently used agents are the ones you have interacted with most recently

- Recently shared are agents that have been shared with you

- Popular in your workspace are agents that are frequently used by your team

- All agents shows your complete agent library

2. Advanced Filtering Options

We also added filters that let you narrow down the list of agents. The filtering options are:

- The creator of the agent

- The labels assigned to the agent

- The integrations used in the agent

- Who the agent has been shared with

- Whether the agent has been verified by admins

- The date the agent was created

You can, as before, search for agents using the search bar.

3. Agent Labels

You can now add up to 3 labels to your agents to help others discover them more easily. Choose from default labels like Marketing, Sales, HR, and Engineering, or custom labels created by your workspace admins. Labels make it simple to categorize and filter agents by team, use-case, or function. Labels can be added at the bottom of the agent configuration.

4. Highlighted Agents

Workspace admins can now highlight up to 4 agents for everyone in the workspace at the top of the agents page. This makes it easy to promote the most useful or frequently needed agents across your organization. To highlight an agent, admins can hover over it, click the three dots, and select “Highlight”.

We can't wait to hear how you use labels and filtering to organize your agents! 🙌

Company Knowledge

We are excited to introduce Company Knowledge, a new way to search across all your connected data-management platforms directly from Langdock chat!

Your company’s knowledge may be spread across many tools like SharePoint, Google Drive, Outlook, and Teams. Langdock brings it together. With Company Knowledge, you can now search for information across all your connected platforms and get a single answer.

Why we built this

Since launching our integrations, teams have been connecting tools like SharePoint and Google Drive to build more capable agents. But we noticed that many of you were looking for information that is spread across multiple systems, which made it hard to find everything in one place. Instead of switching between apps or running separate searches, you can now query all your tools at once and get a synthesized answer with direct links to the source documents.

How to use Company Knowledge

Search from the chat

Click the "Company Knowledge" button in your chat input bar. Select the platforms you want to search, ask your question, and get a single answer pulled from across your integrations.

Watch it work

A timeline panel shows what Langdock is doing in real time: which searches are running, which documents are being read, and how it reaches its answer. You can simply collapse the panel if you just want the final response.

Go to the source

Every answer includes direct links to the original documents. Click through to verify information in SharePoint, Google Drive, or wherever the file lives.

Content-driven search

Company Knowledge does not just search for file names. It opens documents, examines the information, and decides whether the content is complete or whether a further search is needed. This means Langdock can intelligently follow leads across your file systems and discover relevant information that a simple keyword search would miss.

Permission aware

Company Knowledge respects your existing access controls. You only see results from documents you already have permission to view in each connected system.

Supported integrations

Workspace admins can configure exactly which integrations are available for Company Knowledge in the workspace settings.

You can search across:

- Google Drive

- OneDrive

- SharePoint

- Confluence

- Gmail

- Outlook Email

- Slack

- Microsoft Teams

- Google Calendar

- Outlook Calendar

- Linear

Note: We cleaned up the chat input bar with this release. You can find the buttons for deep research, canvas, and web search by clicking on the "+" on the left of the chat input.

This is just the first version of this feature. We are actively working on additional integration support, and can't wait for your feedback and to hear how you use Company Knowledge! 🙌

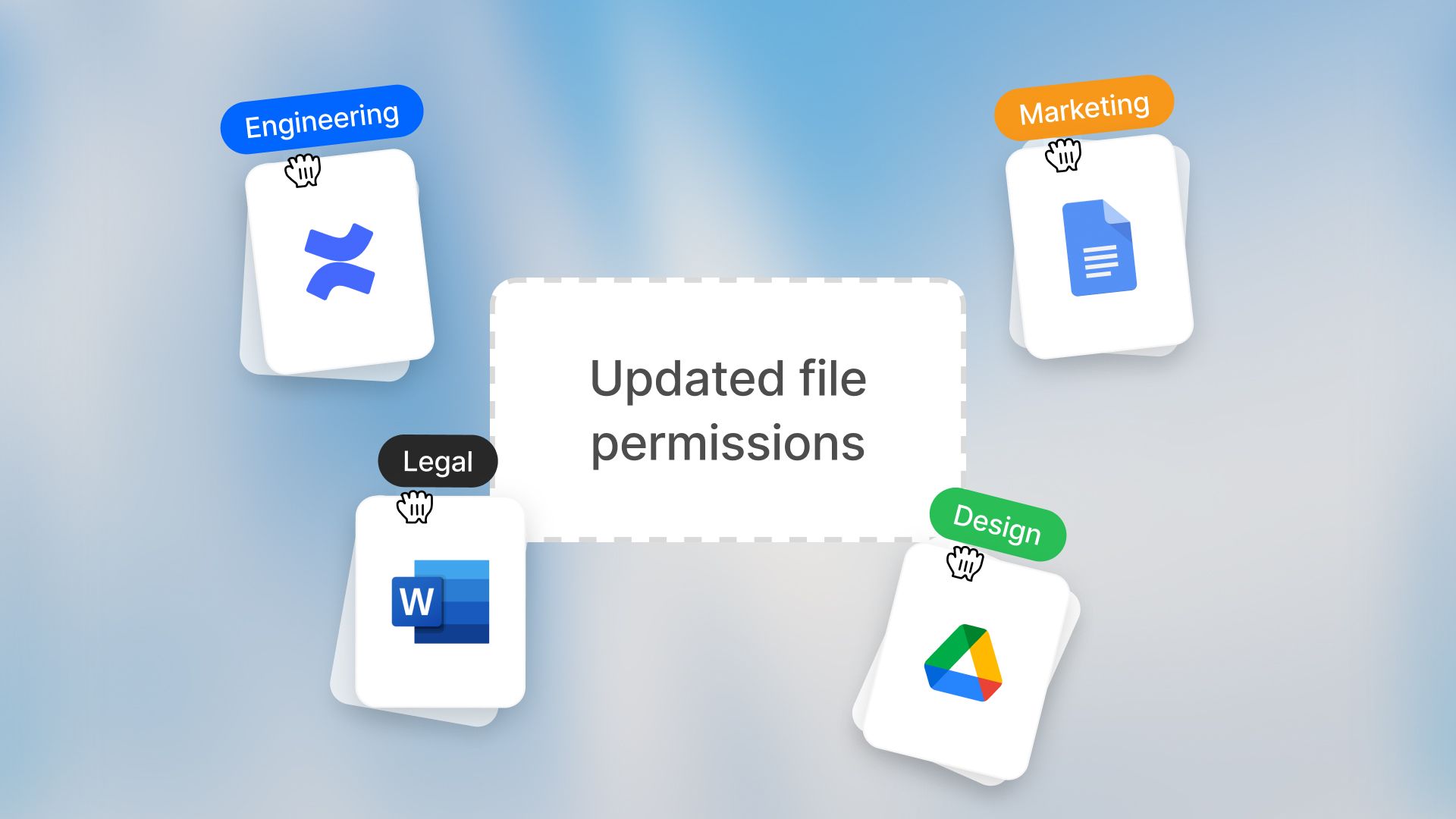

Updated File Permissions

We are making an important update to how permissions work when you attach folders or files from third-party tools (like Google Drive or SharePoint) to your agents.

Old permission behavior

Previously, when you attached a folder or file from a third-party tool to an agent and shared that agent with others, everyone with access to the agent could work with the synced data - even if they did not have permission to view those files in the source system.

This meant that there were two places where permissions to access the folder needed to be managed: in the source system (such as Google Drive or SharePoint) and in Langdock.

New permission behavior

Users will now only be able to access files and folders they have permission to view in the source system. If someone tries to use an agent but doesn't have access to certain files, they'll see a clear message explaining which files they can't access, and responses will only use the files they have permission to view.
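The new behavior can be pictured as a simple filter. The Python sketch below is an illustration under assumptions, not Langdock's implementation: `split_by_access` and the file names are hypothetical, and the set of viewable files stands in for whatever the source system (such as Google Drive or SharePoint) reports for the current user.

```python
def split_by_access(attached_files: list[str],
                    viewable_in_source: set[str]) -> tuple[list[str], list[str]]:
    """Split an agent's attached files into usable and blocked ones for one user."""
    # Files the user may view in the source system can be used in responses.
    accessible = [f for f in attached_files if f in viewable_in_source]
    # Everything else is listed in the message shown to the user and ignored.
    blocked = [f for f in attached_files if f not in viewable_in_source]
    return accessible, blocked


attached = ["pricing.xlsx", "contract.pdf", "roadmap.docx"]
user_can_view = {"pricing.xlsx", "roadmap.docx"}

accessible, blocked = split_by_access(attached, user_can_view)
```

Here the response would draw only on `pricing.xlsx` and `roadmap.docx`, and the user would be told that `contract.pdf` is not accessible to them.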

Why this matters

We know this change may affect how some of your agents currently work. However, this change aligns folder and file syncing with how integrations in agent actions have always worked: Users need their own connection and can only access what they're allowed to in the source system. This makes permissions consistent across all Langdock integrations and ensures your company data stays secure.

What you should check

We’ve already emailed users who created agents with attached data from an external source so that they can prepare for this change. Here’s what we recommend for a smooth transition to the new permission behaviour:

- Review agents where you've attached folders or files from third-party tools

- Check who has access to those agents

- Ensure everyone who uses the agent has the right permissions in the source system

We’re looking forward to your feedback on this one! 🙌

- Improved chat sorting: Search results via "Search chats" in the left navigation bar and via the command bar are now sorted by recency for more relevant results.

- Descriptive chat titles: Chat and workflow titles are now more descriptive, shorter, and generated in the user’s primary language.

- Enhanced file search: Native search pickers now display parent folders and full hierarchical paths for better file identification.

- Deep research disabled after use: After a deep research run, the button is now disabled so that your next message does not immediately trigger another search. Users usually prefer to send normal prompts to keep working with the research output and process the findings.

- Hide group members: Group admins and workspace admins can now hide a group's member list from its members for compliance reasons.

- Agent user feedback: Agent creators now receive notifications in their inbox when other users leave feedback on their agents.

- Import/Export agents and integrations: Users can now import/export agents and integrations via JSON files.

- Increased action output: The max action output has been increased from 150k to 200k characters.

- Saving @-actions in the prompt library: We added the ability to save @-actions in your saved prompts. This allows you to predefine which agent, knowledge folder, or integration you want to use.

- Send button for small screens: On small screens, pressing the Enter key now creates a new line and no longer sends the prompt. On large screens, Enter still sends the prompt.

- BYOK model replacement: BYOK admins now have the option to select a replacement model when removing custom models. All agents, chats, and workflows are automatically updated to the new model, which is especially helpful when sunsetting models in favor of newer models.

- New MCP version supported: We upgraded our MCP support to version 2025-11-25 to support additional MCP servers. Previous MCP servers are still supported.

- Automatic MCP server detection: When creating a new MCP integration, the server name and icon are now automatically fetched from the URL, with no manual entry needed.

Project Sharing

We are excited to introduce project sharing, an update that has been requested frequently since we launched projects in July! 🚀

Until now, projects have been a great way to organize your own chats and configure context through instructions and files for smaller projects. With project sharing, we are turning them into a powerful collaboration tool. You can now share entire projects, including all contained chats, files, and instructions, with your colleagues or entire groups.

Why Project Sharing?

Projects have always been great for organizing your own work. But we noticed that many of you were using them to structure work that is actually done collaboratively. Collaboration often happens in context, not just in single messages. When working on projects like a marketing campaign, a research topic, or a coding sprint, you often need to share the full picture, including files, instructions, and history. Instead of sharing individual chats one by one, you can now give your team access to the entire context of a project and allow others to build on top of your work.

Key Features

Flexible Sharing

You can now share a project with specific users or groups in your workspace. Everyone with access can view the chats, files, and custom instructions within that project. This is perfect for onboarding new team members to a topic or keeping everyone aligned.

Better Organization

To help you manage shared and private projects, we have updated the project’s sidebar and added filtering options to the project view. You can now easily filter chats created "By you" or "By others" to keep your workspace clutter-free.

Granular Permissions

You stay in control of your data. When sharing a project, you can manage access by choosing the roles of your collaborators:

- Editors can share the project with others, change the project title, update instructions, and manage attached files. They can also add new conversations to the project.

- Users have read-only access. They can see project configurations, read all chats, and view project contents.

All conversations added to a shared project, whether existing or new, are visible to all project members.

Important: Regardless of your role, you are the only one who can change, rename, or delete your own chats, even if the project is deleted.

Project Sharing is available starting today. To share a project, open your project and click on the "Share" button at the top right.

We can’t wait for your feedback and to hear how you collaborate! 🙌

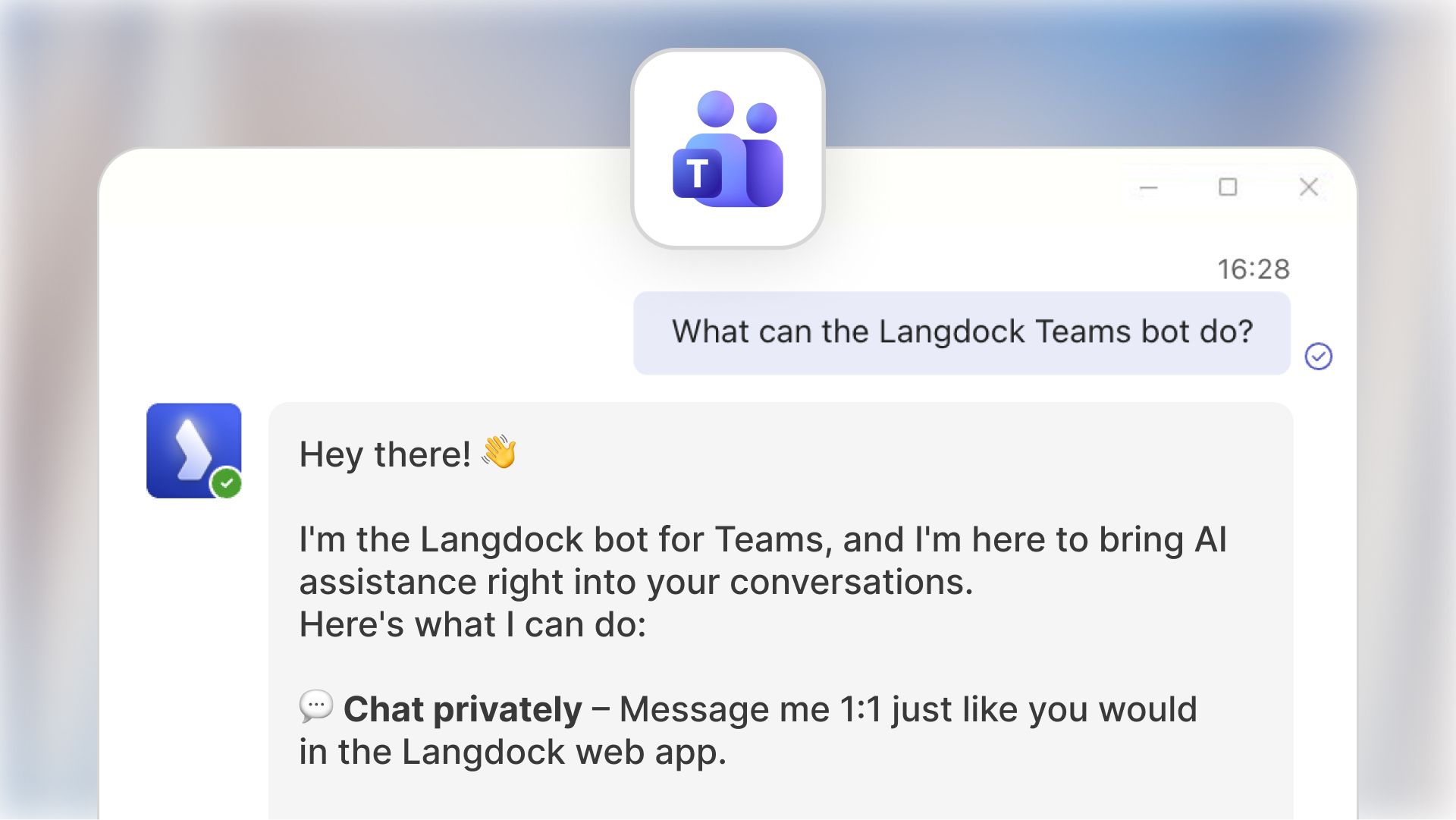

Microsoft Teams App

We are bringing Langdock even closer to where many of you collaborate every day by adding Langdock to Microsoft Teams 🤝

With the new Microsoft Teams App, your team can now use Langdock models and Agents directly inside Teams - in a private chat or collaboratively in channels. This is especially helpful for small, ad-hoc tasks that come up every day, such as quickly translating text or researching a smaller topic without opening Langdock.

How to use the Teams App

Private conversations with Langdock App

Chat 1:1 with Langdock just like in the web app - full context, full capabilities, zero friction. Click on “New chat” and type “Langdock” to open a chat with the app.

Channel collaboration with @Langdock

Alternatively, you can pull Langdock into any channel (a space for a specific topic, organized as main messages with threads of replies). The app reads a thread only when it is mentioned with @, and then uses the entire thread to help you with the current topic.

Working with the App in chats

You can also work with the app in chats you have with other users. Here, too, you can tag the app with @ followed by your prompt. The model can only reference that individual message, not the entire chat.

Main Features

Selecting your default model

When pulling the app into your chat, you can either use the default model (e.g., GPT-5.1) or select a specific agent. The default model is a good choice for quick needs like explanations or smaller questions.

Agent selection inside Teams

Start a conversation with any Langdock Agent - from Translators to HR FAQ Agents - directly inside Teams.

File & image support

Upload documents or images in Teams messages - Langdock can read and analyze them just as it does in the web app.

Why we built the Teams App

Many teams use Microsoft Teams to collaborate and communicate, and many users have asked for a way to use Langdock in Teams, as they already use the app several times a day. Teams becomes a natural extension of Langdock that helps your team collaborate faster and embed Agents into everyday workflows - from shared problem‑solving to specialized channel setups.

How to get started

Your workspace admin can install Langdock from the Teams Admin Center.

After installation, users can simply search for Langdock in the Teams apps.

If you want to dive deeper, check out the setup guide and usage guide.

We’re looking forward to your feedback on this one! 🙌

Assistants are now Agents

We're renaming Assistants to Agents across Langdock! This change reflects how the product has evolved and how the market and many of you talk about AI.

What's changing?

Only the name. Your existing assistants keep all their capabilities, configurations, and sharing settings. Everything works exactly as before.

Why Agents?

When we launched Assistants in the summer of 2023, the market was figuring out basic functionality, like how to let AI work with a few documents. Since then, the capabilities have grown significantly. What started as rather simple chatbots can now handle large file collections, work with tabular data, connect to your tools, and much more. They use agentic behavior to choose the right approach and tools for each task, working within the configuration you define.

The term "Agent" has become the industry standard for what we used to call Assistants. Many companies, such as Microsoft and Google Enterprise AI, use this terminology. More importantly, we've heard from many of you over the past months that users are used to the term "Agents" or even ask whether Langdock has agents available.

Why now?

This change comes with the launch of Workflows a few weeks ago. Agents and Workflows are complementary products: Agents are your interactive AI assistants for specific tasks, while Workflows let you chain multiple building blocks together (including multiple agents) to automate larger processes in the background.

What does this mean for you?

No action is required from you! The transition happened automatically, and we've updated all documentation to reflect the new terminology.

The assistant API is not affected by this renaming for now. There will be a separate notification early next year to communicate the API changes.

The Langdock platform now consists of the following products: Chat, Agents, Workflows, Integrations, and API. Renaming a core product isn't something we do lightly. We've thought carefully about this and talked to many of you. Ultimately, it made sense for us given how the product and market have evolved.

We'd love to hear your feedback on how we can continue to improve Agents for you! 🙌

- Support for Gemini 3 Pro: We added support for Gemini 3 Pro. The model required some additional configuration options to function properly. Currently, the model is only available as a global deployment and not yet as an EU-hosted model. BYOK customers can add the model in the model configuration. For customers who use models offered by Langdock, we added the model as a global deployment, and admins can enable it in the workspace settings.

- Deactivate workflow nodes: Workflow editors can now deactivate workflow nodes by clicking on the three dots at the top right.

- Building workflow triggers: Until now, you had to be allowed to build custom integrations and have workflow access to build triggers for integrations. Now, all users who are allowed to build integrations can build triggers. You can go to your custom integration and add the trigger like you would add an action.

- Updated MCP version: We upgraded our MCP support to version 2025-11-25 to support additional MCP servers. Previous MCP servers are still supported.

- Change order of image models: Admins of BYOK workspaces can now change the order of image models, just as they can for other models.

- Agent Forms: Forms in agents had up to 15 fields until now. We increased the limit to 25 fields.

- API to create and update agents: We launched new API endpoints to create new agents and update existing agents via API. See our assistant API documentation here.

Workflow Improvements

Since launching Workflows a few weeks ago, our team has been working on a lot of improvements and performance upgrades for the product to make the experience smoother and more powerful. As a lot of users have requested, we have focused on making building, testing, and refining your workflows easier than before. Thank you again for all the feedback - see our launch video below!

Here is a list of all major updates:

New node: Loop

Enhance your workflows by automating repetitive actions. Loop nodes allow you to perform a selection of nodes multiple times before moving on to the next node. This helps with repetitive actions or iterating over a list of items.
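Conceptually, a Loop node behaves like a `for` loop around a group of nodes. The Python sketch below is only an analogy: `run_loop` and the `Node` type are hypothetical names, and real workflow nodes are configured in the visual builder rather than written as functions.

```python
from typing import Callable, Iterable

# A node is modeled here as a function that takes a value and returns a new one.
Node = Callable[[str], str]


def run_loop(items: Iterable[str], body: list[Node]) -> list[str]:
    """Run every node in `body`, in order, once per item."""
    results = []
    for item in items:
        value = item
        for node in body:
            value = node(value)  # output of one node feeds the next
        results.append(value)
    # Only after all items are processed does the workflow move on.
    return results


cleaned = run_loop(["  alice ", "BOB"], [str.strip, str.title])
```

In this toy run, each name passes through a "trim" node and a "title case" node before the loop finishes.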

New node: Generate image

Image generation now also works in workflows. This is perfect for visual automation and creative tasks if you regularly need to generate images. Simply select the ‘Generate Image’ node, and choose your preferred image model to get started!

Re‑run Failed Executions

If something doesn’t go to plan, you can now retry failed runs instantly without restarting the entire workflow.

Custom Webhook Responses

Workflows can now send real‑time replies to incoming requests. This is great for syncing with apps like Slack or notifying other systems instantly.

File Uploads via Webhooks

You can now send files directly into your workflows from external applications, opening up even more integration possibilities.

Sidebar Pinning

Just like you can pin your assistants to the sidebar, we have also implemented pinning for Workflows. Now, you can keep your favourite workflows just a click away!

Workflow Chat Context

You can now personalise the behaviour of your workflow chat by adding your favourite tools, such as Gmail or Outlook, HubSpot or Salesforce, directly as context to the Workflow builder. Simply open the chat and click ‘Set up’ to customise how workflows are built for you.

Customise Workflow Submission Forms

When creating a workflow form, you can now customise the submission experience. Adjust the colour, description, and name of the submission by unfolding the ‘Customisation’ section during form creation.

These improvements can help with simple daily tasks, like reminders or quick research, as well as more complex, multi-step processes. We can’t wait to hear what you think!

Improved PDF Processing

We've improved how we process PDFs to better handle visual content.

Until now, when you uploaded a PDF to Langdock, we extracted the text from it and sent it to the AI model, and created so-called “embeddings” to enable the model to perform a semantic search on the PDF. This allows the model to generate high-quality responses based on the uploaded file.

To further improve this processing, we now also take screenshots of pages and provide them to the model. In simple terms, this gives the model “eyes”, so you can now ask questions like “Describe the details of the graphic on page 26” and get accurate answers, since the model can now actually "see" the document content. The model now has a new “view page” tool for inspecting pages, which is particularly helpful for images, charts, logos, and other visual elements.

As always, let us know what you think! 🙌

- File size support increased: We increased the character limit for files from 4 million to 8 million characters.

- Project limit increased: We increased the limit on the number of projects that can be created from 10 to 30.

- Voice input limit increased: We increased the limit of the voice input duration from five to ten minutes.

- Performance improvements: Our engineering team has deployed major performance improvements over the last few weeks, resulting in faster page loading times and smoother answer generation.

- Model key pooling: Bring-your-own-key customers can now pool model deployments in separate regions. This helps increase rate limits and allows for another region to serve as a fallback in case the model encounters an error.

- Updated documentation: Over the last year, more features have been added to the product, and our documentation has grown accordingly. We cleaned up the structure, translated the documentation into German, and launched documentation for workflows. You can find the documentation here: https://docs.langdock.com/
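The fallback part of the model key pooling item in the list above can be sketched in a few lines of Python. This is an illustration under assumptions, not Langdock's implementation: `call_with_fallback`, the region labels, and the two stand-in model functions are hypothetical, and the sketch does not model how pooling raises rate limits.

```python
from typing import Callable

# A pooled deployment is modeled as (region label, function that calls the model).
Deployment = tuple[str, Callable[[str], str]]


def call_with_fallback(pool: list[Deployment], prompt: str) -> tuple[str, str]:
    """Try each pooled deployment in order and return the first success."""
    errors = []
    for region, call_model in pool:
        try:
            return region, call_model(prompt)
        except Exception as exc:
            # Remember the failure and fall back to the next region.
            errors.append(f"{region}: {exc}")
    raise RuntimeError("all pooled deployments failed: " + "; ".join(errors))


def overloaded(prompt: str) -> str:
    """Stand-in for a deployment that currently returns an error."""
    raise RuntimeError("rate limit reached")


def healthy(prompt: str) -> str:
    """Stand-in for a deployment that answers normally."""
    return f"answer to: {prompt}"


region, answer = call_with_fallback(
    [("region-a", overloaded), ("region-b", healthy)], "Hello"
)
```

In this run the first deployment fails, so the request is served by the second one.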

Claude Opus 4.5

Anthropic’s Claude Opus 4.5 is now available in Langdock! 🚀

The new model introduces enhanced capabilities for coding, agentic workflows, and handling complex tasks.

Claude Opus 4.5 delivers improvements in reasoning, providing clearer answers with less technical jargon.

You can learn more about the model in Anthropic’s official announcement.

We have automatically enabled Opus 4.5 in all workspaces where Claude Sonnet 4.5 was previously activated.

Like Claude Sonnet 4.5, the model is available in two versions: one with reasoning and one without. This allows you to choose whether you want the model to reason for your current request.

New GPT-5 & 5.1 Versions

We upgraded GPT-5 and GPT-5.1 to the latest model versions from OpenAI, now featuring a dedicated "Chat" version optimized for conversational interactions.

The new Chat version delivers responses with richer formatting: better structure, clearer lists, and more polished outputs that make conversations easier to follow and act on. These are the same model versions currently used in ChatGPT.

Both GPT-5 and GPT-5.1 "Chat" versions are hosted in the EU. The previous GPT-5 and GPT-5.1 models have been updated and are now live. If you haven't tried out the latest GPT-5 series yet, give it a try!

GPT-5.1

We are excited to announce that GPT-5.1 is now available in Langdock! 🚀

As of today, we are sharing the newest GPT-5 series release: GPT-5.1, which is also available in thinking mode. This model improves on both intelligence and communication style.

This model is more intelligent, warmer, and better at following instructions than GPT-5. You may notice a more playful tone, paired with well‑structured outputs.

This model is a great choice when you want it to closely follow your guidance and produce polished responses. It has become faster at solving simple tasks, while being more persistent on complex ones. The model generates clearer responses by using less technical jargon.

You can read more about the launch in the announcement from OpenAI.

Like the previous GPT-5 model, the model is available in two modes - one for fast responses (GPT-5.1) and one for reasoning before responding (GPT-5.1 Thinking).

The model is hosted by Microsoft Azure inside the EU and available to all workspaces now.

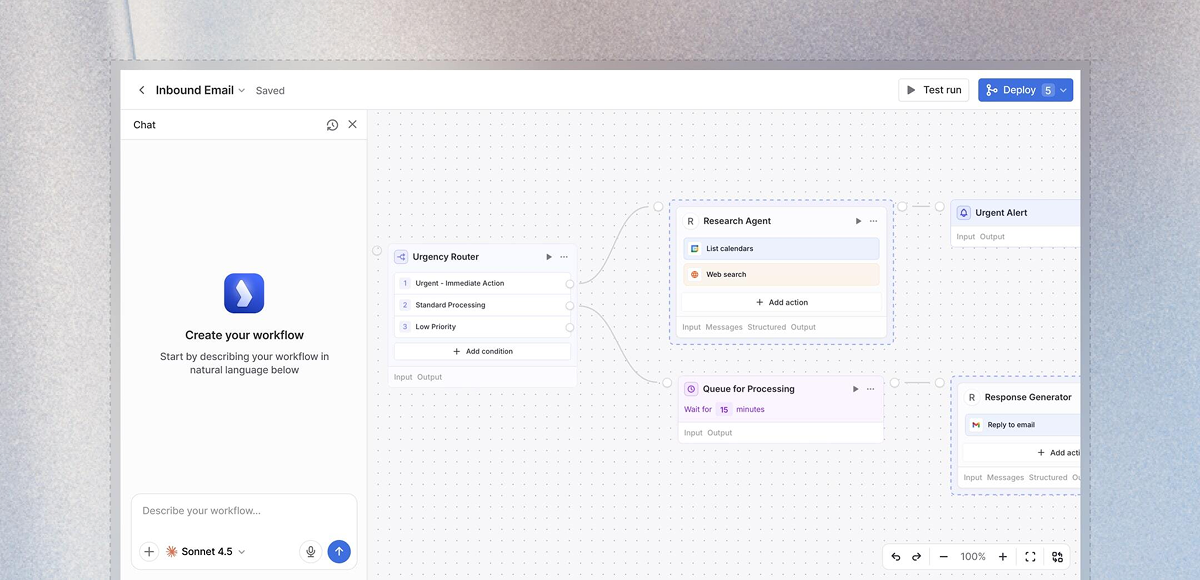

Workflows

We're excited to launch Workflows, a powerful new way to build AI-driven automations in Langdock! 🚀

Workflows bring together everything you already use in Langdock - Chat, Assistants, and Integrations - into end-to-end automations. Think of it as your orchestration layer where you can chain multiple steps together, add conditional logic, loop through data, and create sophisticated processes that run automatically.

What are Workflows?

Workflows allow you to build complex, multi-step automations without writing code. They can be triggered by a form submission, a schedule, or an event in your connected apps and handle entire processes from start to finish, automatically and reliably.

Key capabilities:

- Multi-step automation: Chain assistants, integrations, and custom logic into sophisticated workflows.

- Flexible triggers: Start workflows manually, on a schedule, via webhook, or through form submissions.

- Conditional logic: Add deterministic if/then branches, loops, and decision trees to handle complex scenarios.

- Human-in-the-loop: Include manual approval steps when needed for critical decisions.

- AI at every step: Use different AI models throughout your workflow to analyze, decide, and adapt.

- Cost management: Monitor and control workflow spending with built-in cost limits and usage tracking.

Why Workflows?

More powerful than Chat: While Chat is perfect for interactive conversations, Workflows automate entire processes in the background. Set them up once, and they run reliably 24/7.

More flexible than Assistants: Assistants are great for specific tasks, but Workflows let you combine multiple assistants, add custom logic, integrate with external APIs, and create sophisticated decision trees.

More than Integrations: Integrations connect your apps, but Workflows orchestrate complex processes across those apps, with AI at every step.

Example use cases

- Automatically analyze and route customer support tickets based on content and urgency.

- Generate weekly reports by pulling data from multiple sources, analyzing trends, and distributing results.

- Process form submissions with AI review, conditional approval flows, and automated follow-ups.

- Monitor data sources on a schedule and trigger actions when specific conditions are met.

Getting started

Workflows are available now in Langdock! To start building:

- Ask an admin to activate Workflows in the workspace settings.

- Navigate to the Workflows section in your sidebar.

- Create your first workflow using our visual builder.

- Choose a trigger, add nodes, and connect your logic.

Important notes:

- AI usage in workflows is billed via API pricing, and depending on the number of runs, you might need a subscription upgrade (pricing details here).

- Set cost limits and enable monitoring to control spending.

- All workflow executions are logged for the workflow creator for transparency and debugging.

We're excited to see what you build with Workflows! As always, we look forward to your feedback. 🙌