Changelog

We're constantly working on new features and improvements. Here's what's new with Langdock.

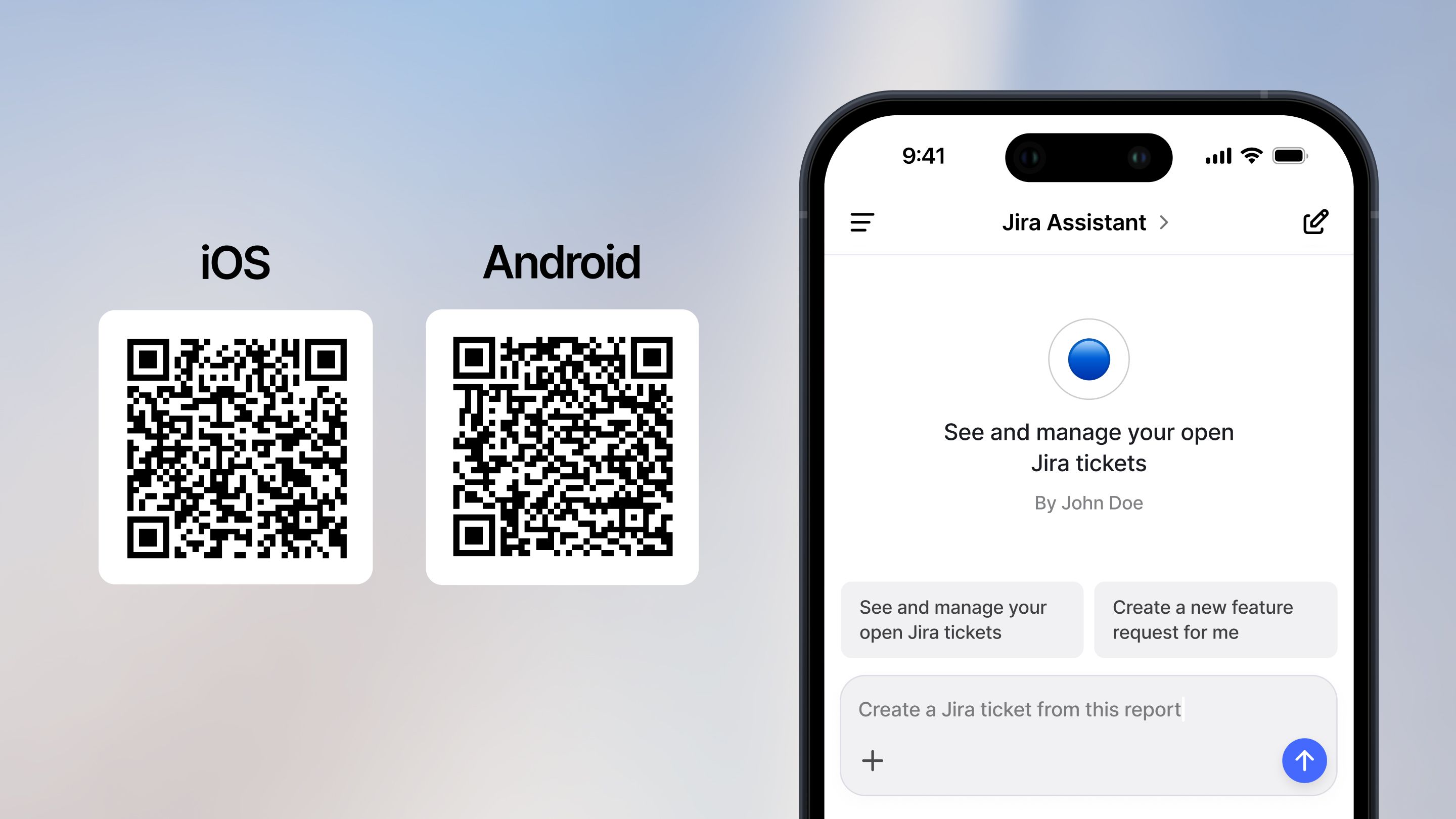

Android and iOS App

Today, we are excited to launch the Langdock mobile app! You can now download Langdock as a dedicated Android or iOS app and use AI from your phone.

Use Langdock wherever you are: choose between your models, chat, and work with your assistants.

Additionally, we added one highly requested new functionality: voice input. Speak your prompt, and Langdock will transcribe it and submit it for you. Look out for the microphone icon in the prompt input field!

To download the app, go to the Apple App Store here or the Google Play Store here.

- Voice input in the browser: In addition to the mobile app, voice input is now also available when you use Langdock in the browser.

- Assistant management: Admins now have an improved way of managing assistants in the workspace. Admins can verify certain assistants to highlight them in the assistant list and re-assign the ownership of an assistant if the previous owner left the company. See workspace settings.

- New integrations: We added new integrations including Salesforce, GitHub, Slack, Airtable, Zendesk, Snowflake and DeepL.

- Increased character limit for text files: We increased the limit for text files from 2M to 4M characters.

- Admin mode for integrations: Admins can now test all integrations before they enable them for the entire workspace. Access to integrations can be managed in the workspace settings.

New GPT-4o version

We've integrated a new version of GPT-4o into our platform! 🚀

This powerful version delivers improved response quality and faster generation times.

The model was previously available as "GPT-4o (latest)". We have now merged the two models, so the regular "GPT-4o" is the newer version.

The GPT-4o image generation capability announced a few days ago is not available in a version hosted on EU servers yet. We will add it as soon as it becomes available in the EU.

We've also made several improvements to enhance your experience:

- Text formatting preservation: When you manually copy parts of a response in chats and assistants, the formatting is now preserved, just as it is with the copy message button

- Native Mermaid diagram support: Create your own Mermaid diagrams directly in our chat. These can be flowcharts, sequence diagrams and many more

- o3 mini via API: OpenAI's o3 mini reasoning model is now available via our API

- Table formatting: We improved the formatting of tables in the chat. This includes the appearance, the copying behavior and the ability to download a CSV of generated tables

New Integrations

We launched our new integrations! It’s much easier to integrate other software tools into Langdock now to retrieve data and take actions. The update consists of three main parts:

- 20+ native integrations are now available in Langdock

- An easier way to build integrations for your own tools

- Improvements to existing integrations and knowledge folders

New integrations and actions

We made integrating external tools into your assistants easier and pre-built many new integrations for the tools our customers use. For example, you can now use the following integrations: Jira, HubSpot, Google Sheets, Excel, Outlook, Google Calendar, and Google Mail.

You can now easily add actions that your assistants can perform. Example actions are:

- Write email drafts and send them to Google Mail or Outlook

- Create or update deals in HubSpot

- Write and update tickets in Jira

- Add an entry to a Google Sheet or an Excel Sheet

- Send a message in a Microsoft Teams chat

- And many more...

Here are more details on how to use them. If you’re missing an integration or specific action, please let us know!

Integrate your own tools

The Langdock team will build integrations to all standard software tools in the coming weeks. If we don’t have an integration (yet), or you want to integrate an internal tool, you can build your own integrations.

We deprecated the previous OpenAPI-schema-based integrations in favor of a simpler integration builder that also allows you to write custom JavaScript to cover all kinds of edge cases. The integrations/actions now live outside of assistants, so you can share and reuse them in multiple assistants. You can follow this guide to set up your own REST API based integrations.

Improvements to existing integrations and knowledge sources

We also improved the interface and experience of existing integrations. Here are the most significant changes:

- When you attach a document from an integration (e.g., SharePoint or Google Drive) as assistant knowledge, we now refresh the content of the document every 24 hours. This ensures that you always work with the latest version of the document in your Langdock assistant. You can also manually refresh a document at any time.

- Knowledge folders can now be shared with users, groups, and the workspace (similar to assistants). The knowledge folders moved from the account settings into the integrations menu to make them more visible.

- If you already built custom actions in an assistant, they are still available. We marked them as read-only, and they will be deprecated on April 30th. We recommend migrating your existing actions to our new, improved actions. If your action is not available out of the box yet, let us know if you need help migrating it.

- Vector databases were also moved from individual assistants to the integrations menu to make it easier to reuse connections. Assistants with existing vector databases were migrated accordingly to ensure they work as before.

Additional information for workspace admins:

- By default, all integrations are enabled. You can configure which integrations should be enabled in your workspace here.

- Workspace-wide integrations (Google Drive & Confluence via service accounts) are now deprecated in favor of the new integrations. Please let your users know so they can configure the integrations manually. The functionalities and permissions are covered completely by the new integrations.

- The permissions per user role have changed to reflect the new integration framework: The permissions “Connect Vector Databases” and “Connect Actions” were deprecated, and the new permissions are “Share knowledge folder” and “Create integrations.”

This new integration framework will allow for many more use cases in Langdock, and it’s just the beginning. In the coming weeks, we’ll add many more functionalities to work with all kinds of data in Langdock. Stay tuned!

Platform Speed Improvements

We just shipped massive speed improvements across our platform! While we are continuously working on model speed, you'll notice everything else is running much faster now. Plus, we released some much-requested improvements on our chat input and API.

- Embedding Models in API: The OpenAI ada-002 embedding model is now available through our API and can be used for personalizing, recommending, and searching content

- Knowledge Folders + Assistants API: Knowledge folders are now fully compatible with the assistants API for seamless integration

- Character Count Indicator: The text input field now provides visual feedback, which shows the character count and turns red when exceeding limits
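As a rough illustration of how embeddings support content search, the sketch below ranks documents by cosine similarity to a query vector. The vectors are made-up three-dimensional toy values and the helper names are hypothetical. In practice, you would obtain the vectors from the embedding model through the API, and they would have far more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vector, documents):
    """Return (title, score) pairs, most similar document first."""
    scored = [
        (title, cosine_similarity(query_vector, vector))
        for title, vector in documents.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy vectors standing in for real embeddings (assumption: invented for illustration).
documents = {
    "Vacation policy": [0.9, 0.1, 0.0],
    "Expense report guide": [0.1, 0.8, 0.3],
    "Onboarding checklist": [0.4, 0.4, 0.6],
}
query_vector = [0.8, 0.2, 0.1]

ranking = rank_by_similarity(query_vector, documents)
for title, score in ranking:
    print(f"{title}: {score:.3f}")
```

The same ranking step applies to recommendations: embed the item a user is viewing and surface the items whose vectors are closest to it.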

Claude 3.7 Sonnet, o3 Mini and Gemini 2.0

We've just added three powerful new models to Langdock: Claude 3.7 Sonnet, OpenAI's o3 Mini and Gemini 2.0 Flash.

Claude 3.7 Sonnet

Claude 3.7 Sonnet is the successor to 3.5, one of the most used models in our user base. The previous 3.5 version is already used by many users for writing tasks, such as emails or translations, and for coding.

The main upgrade in the new model version is a dual-mode capability:

- The normal mode lets you use it as a regular LLM that immediately creates an answer for simpler tasks (like generating an email or translating a text).

- The reasoning mode allows the model to self-reflect before answering, to provide a better, deeper answer for complex problems (like strategy, maths or science).

We have added the modes as two separate models (Claude 3.7 Sonnet and Claude 3.7 Reasoning).

o3 Mini

OpenAI's o3 Mini is the latest and most efficient model of OpenAI's reasoning series.

Reasoning models, like o3 Mini, o1, R1 from DeepSeek, or the Claude 3.7 Sonnet model mentioned above, use chain-of-thought thinking to split a task into several steps. This makes them useful for complex tasks, like maths, physics, complex instructions, coding or complex strategic tasks.

o1 is the reasoning model with broader knowledge, while o3 Mini is faster than its predecessor and balances speed and accuracy. Because o3 Mini allows control over its reasoning effort, we have added a standard mode and a high-effort reasoning mode as two separate models (o3 Mini and o3 Mini High).

Gemini 2.0 Flash

We also added the new Gemini 2.0 Flash model, which is now available in the EU as well. The Flash model from the previous 1.5 Gemini generation was the faster, smaller model compared to the larger and more advanced Gemini 1.5 Pro. The new Gemini 2.0 Flash outperforms Gemini 1.5 Pro on key benchmarks and is twice as fast.

Assistant Forms

We are bringing a new way to interact with assistants in Langdock: Assistant forms. When building an assistant, editors can now choose to use the new form input method, where they can define the input fields shown to users.

You can build an interface to structure the inputs users need to enter to receive high-quality results, similar to survey forms. When users open an assistant that uses the new input method, they are presented with the form the editor built. You can use inputs you know from other tools, like:

- Single-line text

- Checkboxes

- File upload

- Single-select options

- Number

- Date

This gives assistant creators more flexibility when building assistants and allows them to tailor the input structure to their specific needs, while making it easier for other users to use the assistant.

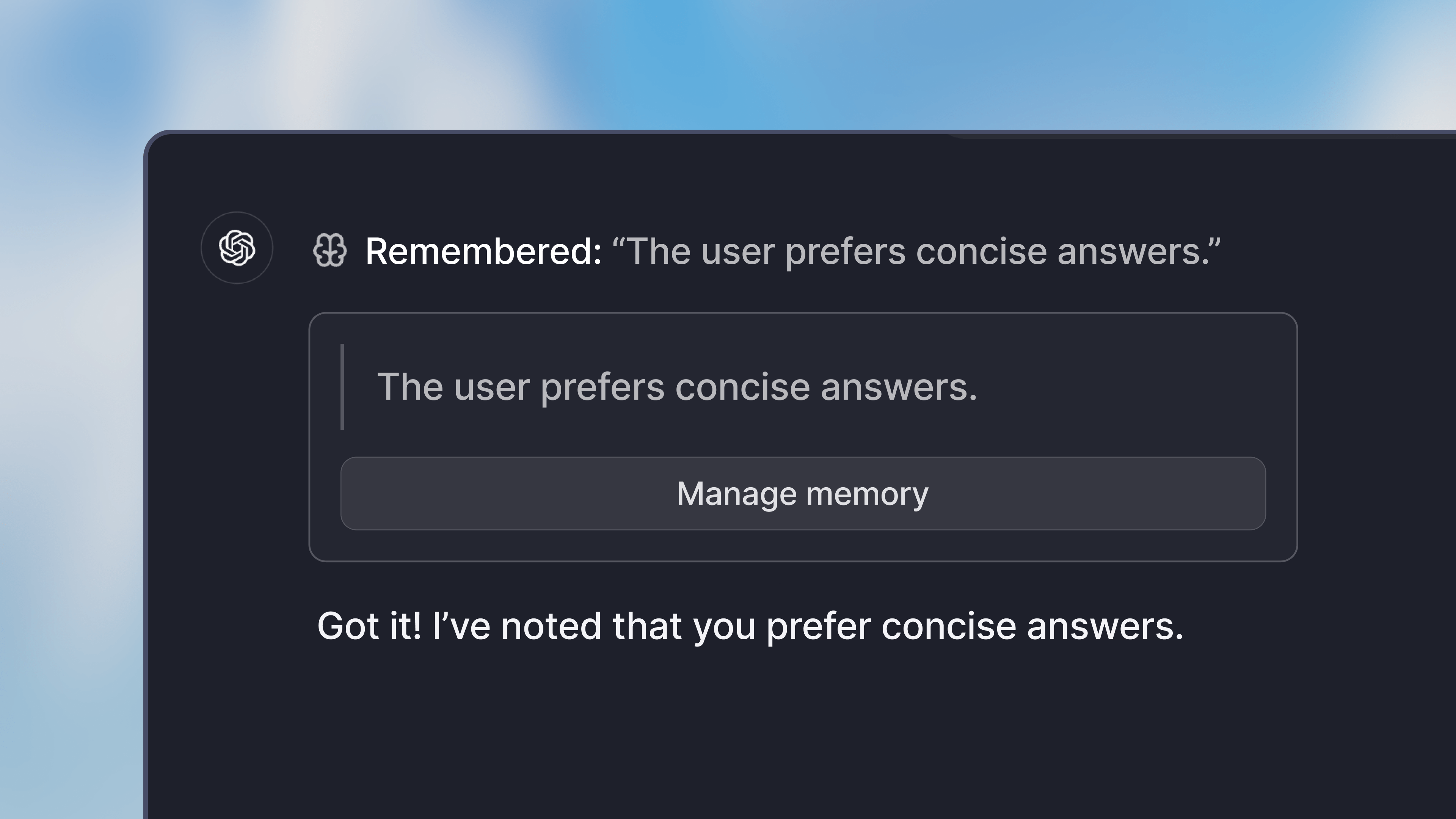

Memory

Memory offers deeper personal customization of the model responses, by saving information from past interactions in the application.

When using memory, you can tell the model to remember certain information about you, your work or any preferences you have. It will then save the information in the application. For example, you could have it:

- Remember certain details about your job

- Share a preference for a specific style of writing

- Remember your name and other personal details

By default, Memory is disabled. To use it, head over to the preferences in your settings. There you can enable chat memory in the capabilities section.

All memories are stored in your account, and are available to you in all your chats (not assistant chats). They are not accessible by others in your workspace.

- OpenAI o3 mini: We added support for the new OpenAI o3 mini model. Admins can configure it in the settings. We consume the model from Microsoft Azure and it is available as a global deployment.

- Increasing password security requirements: We increased the minimum number of characters a password needs to have. We recommend using a password manager, the magic email link login or login through SSO.

- Langfuse integration: We added a Langfuse integration, which allows technical users to assess the performance of assistants.

- Prompt variables: We added support for using the same input variable several times in the prompt. You fill out one variable and it gets copied to all occurrences of the same variable.

DeepSeek-R1

We've added support for the new R1 model from the Chinese AI company DeepSeek. R1 has been receiving a lot of attention in the media recently for its strong performance. The model rivals OpenAI's o1-series and is open-sourced for commercial use.

The R1 model is available in multiple versions. We are self-hosting the 32B version of the model on our own servers in the EU and consume the full 671B version from Microsoft Azure in the US. Since the model is still early and focuses on reasoning, we have deactivated tools like document upload, web search and data analysis for now.

Admins can enable the models in the settings.

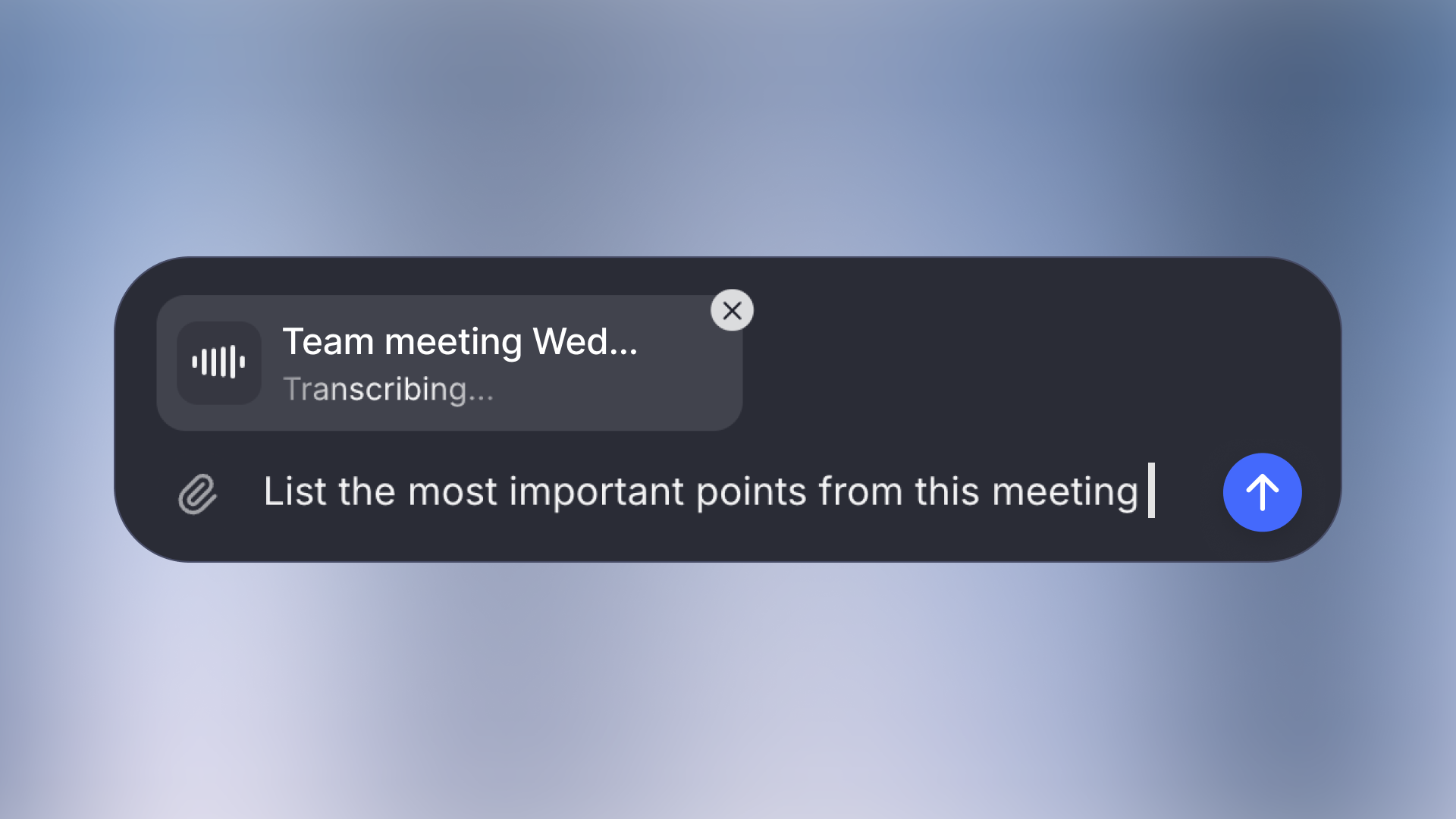

Audio & video upload in chat

We're excited to announce that you can now work with audio and video files in the chat.

Upload your recordings (up to 200MB) and our system will automatically transcribe them, allowing you to have natural conversations about the content.

You can work with all common formats including MP4, MP3, WAV, and MPEG files. Whether you need to review a team meeting, analyze a client call, or process a voice memo, simply upload your file and start asking questions about its content.

- Llama 3.3 model: We added the newer Llama 3.3 70B model to the platform

- OpenAI o1: We added the o1 model from OpenAI to the platform. However, it is only available as a global deployment, which means that servers could potentially be outside of the EU. The model is turned off by default, but admins can activate it in the settings.

- Amazon Nova models: We added the Nova models from Amazon in the model settings. However, they are only available in the US at this point.

- Gemini as backbone model: Admins can set up Gemini models as a backbone model now. The backbone model defines tasks in the background for some models.

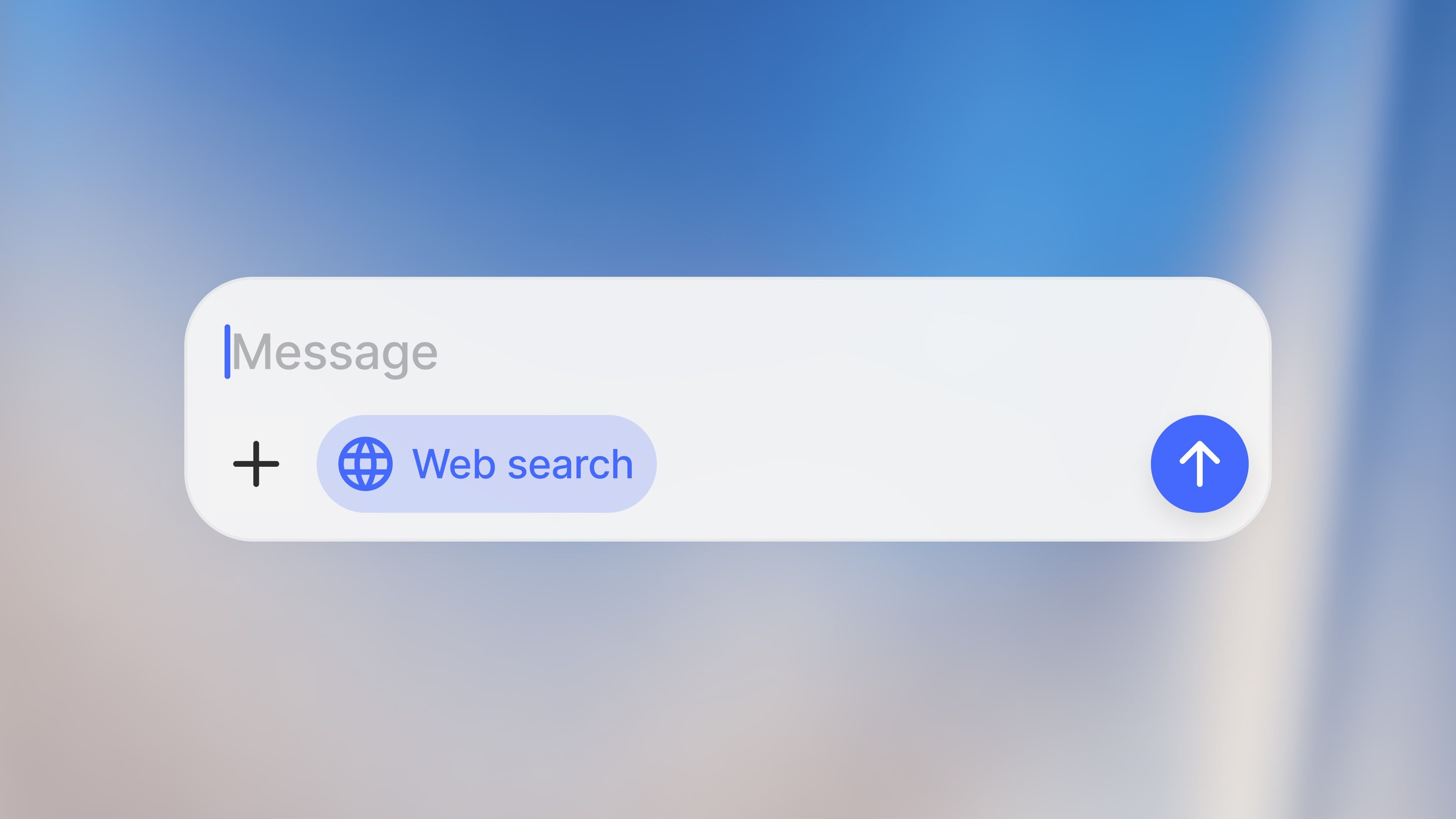

Web search mode

Langdock now offers an enhanced web search mode, providing quick and current answers along with links to relevant sources from the internet.

If web search is enabled for your workspace, you can now turn it on in the newly redesigned chat input bar. This will force the model to search the web for up-to-date news and information regarding your query.

- Sidebar resizing: You can now resize the sidebar to your preference.

- Response copying: Users can now copy responses while they are still generating.

- Spending limits in API: We now support setting spending limits for API usage in the workspace settings.

- Prompt library: Adjusted prompt library layout to show more content from the saved prompts for a better experience.

- Long chat performance: We optimized the rendering of chat messages, resulting in smoother performance in bigger chats.

- Data Analyst: We've made some larger improvements to the Data Analyst, making it more reliable.

- Upload of Python, JS, HTML, CSS, PHP: You can now upload more file types to Langdock.

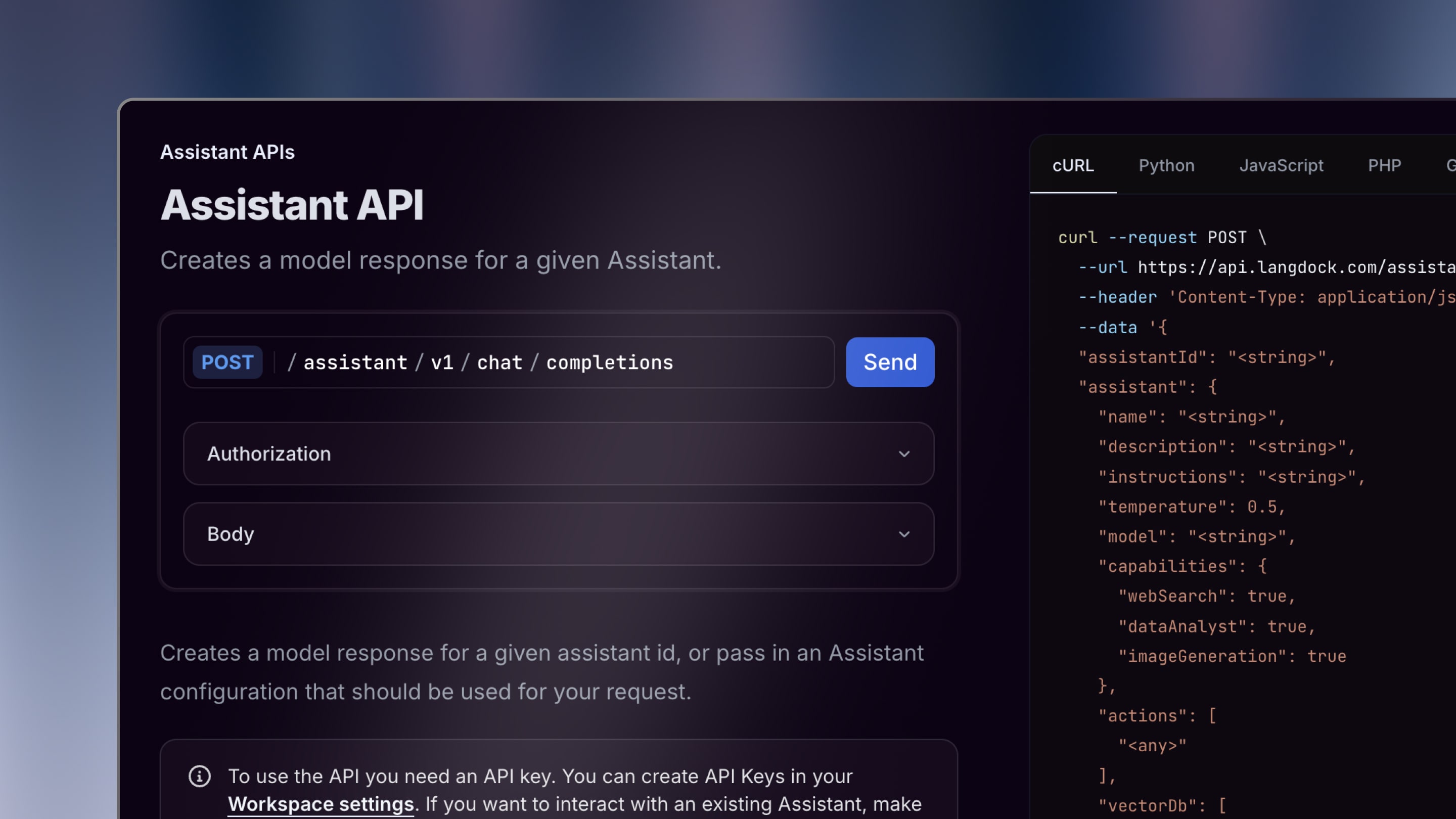

Assistant API

We launched the API for assistants. You can now access assistants, including attached knowledge and connected tools through an API.

To enable an assistant to be accessible through the API, admins need to create an API key in the API settings. Afterward, you can share the assistant with the API by inviting the key like a normal workspace member.

After configuring the API in your workflow (here are our docs), you can then send messages to the assistant through the API. The API also includes structured output and document upload.

- Formatting of copied output: We improved the formatting of output when you copy a response into a different tool.

- Share chats: When a user clicks on 'create link' in the assistant sharing menu, the URL is automatically copied into the clipboard.

- Data analyst: We improved the data analyst and the handling of CSVs, PDFs and Excel Files

- Pinecone as Vector Database: We added support for Pinecone as a vector database.

Command Bar (Cmd+K)

You can now navigate Langdock and search chats directly from your keyboard with the new command bar feature. This allows for quick and easy access to the information you need, right at your fingertips.

Pressing Cmd + K (Ctrl + K on Windows) opens a menu to quickly perform different operations. Here are a few examples:

- Search through all your chats

- Search a specific assistant

- Quickly change settings, like switching to dark mode, opening the documentation, the changelog, or the support chat

We also added a search button in the top left corner which opens the command bar.

There are also new and updated shortcuts:

- Open a new chat: Cmd/Ctrl + Shift + O

- Open/close the sidebar: Cmd/Ctrl + Shift + S

- Copy the last response: Cmd/Ctrl + Shift + C

We hope these improvements make you even more productive when using Langdock.

- Long text in variables: The display of long text in variables has been improved.

- Actions improvement: Added support for multiple headers in actions.

- Redirect from sharing assistant page: When sharing an assistant, users were redirected to the assistant overview. We improved the behavior, so users are not redirected and stay in the assistant editor.

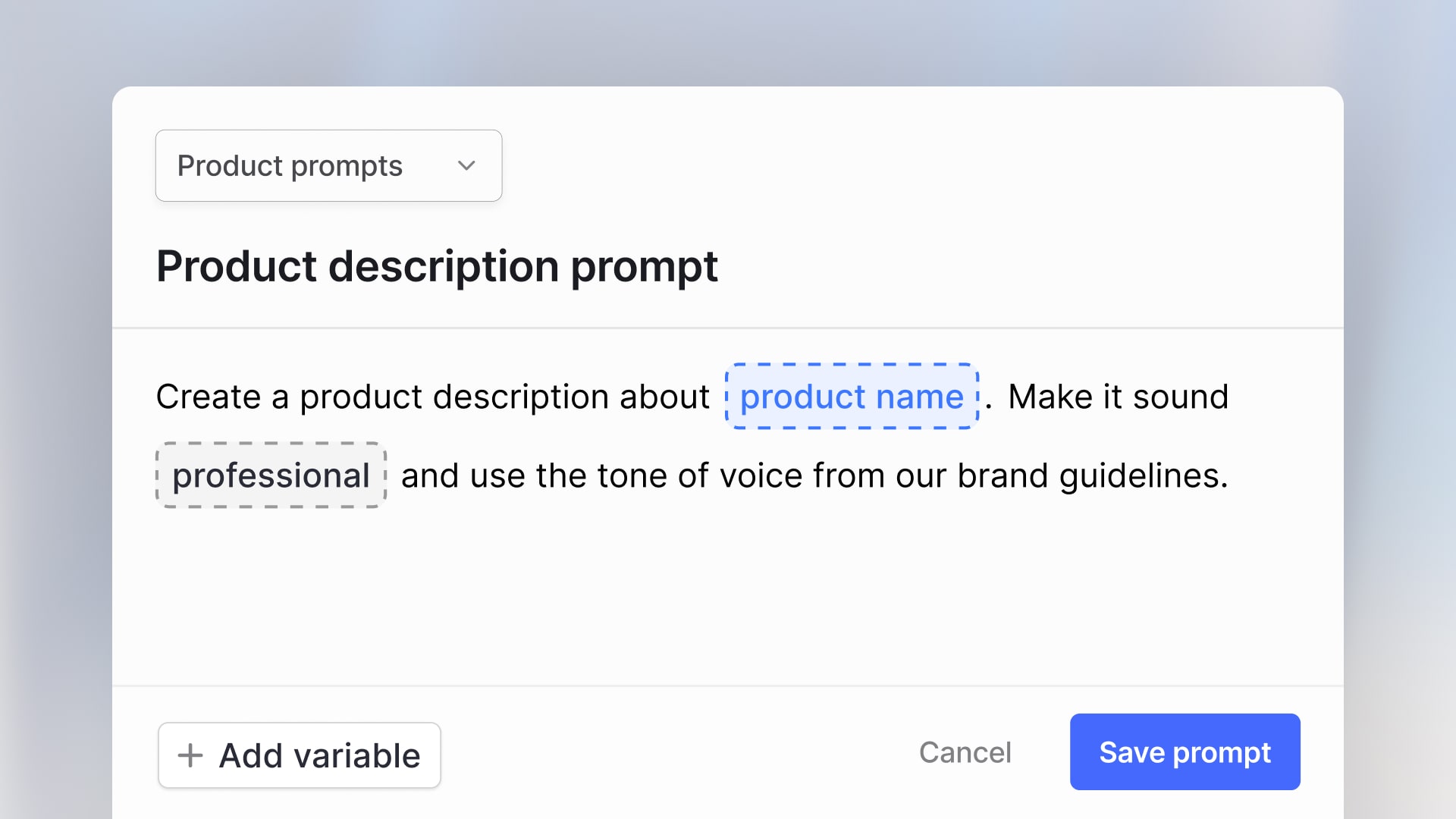

Prompt Variables

You can now incorporate variables directly into your prompts and create dynamic templates that can be easily reused across different contexts.

When creating a new prompt in the prompt library, wrap a word with {{ and }} or click on the variable button at the bottom to make it a variable. When using the prompt later, users can quickly fill out the variables in order to customize the prompt to their needs.

This makes it easy to reuse a prompt in different contexts without leaving your keyboard, and makes it easier for others to use the prompt when you share it.

You can find more details in our section about the prompt library in our documentation.
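To illustrate the idea behind `{{variable}}` placeholders, here is a minimal sketch of template filling. It is not Langdock's implementation: the function names and the sample prompt are hypothetical, and it assumes a variable name is any text between `{{` and `}}`. A variable that appears several times is filled everywhere from a single value.

```python
import re

# Matches {{ name }}; whitespace just inside the braces is ignored.
VARIABLE_PATTERN = re.compile(r"\{\{\s*([^{}]+?)\s*\}\}")


def find_variables(template):
    """Return variable names in order of first appearance, without duplicates."""
    names = []
    for name in VARIABLE_PATTERN.findall(template):
        if name not in names:
            names.append(name)
    return names


def fill_prompt(template, values):
    """Replace every occurrence of each known variable; leave unknown ones untouched."""
    def substitute(match):
        name = match.group(1)
        return values.get(name, match.group(0))

    return VARIABLE_PATTERN.sub(substitute, template)


# Hypothetical prompt template for illustration.
template = "Write a {{tone}} email to {{customer}}. Keep the {{tone}} style throughout."

print(find_variables(template))
print(fill_prompt(template, {"tone": "friendly", "customer": "Acme GmbH"}))
```

Leaving unknown variables untouched is a design choice in this sketch: a partially filled prompt still shows which inputs are missing.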

- Web search: Improved web search speed and display of sources.

- Claude 3.5 Sonnet: Claude 3.5 Sonnet tended to go to the web more often than other models. We improved this behavior.

- Assistant feedback: This improvement allows users to submit feedback for assistants without free-text inputs.

- API improvement: We made our API compatible with n8n.

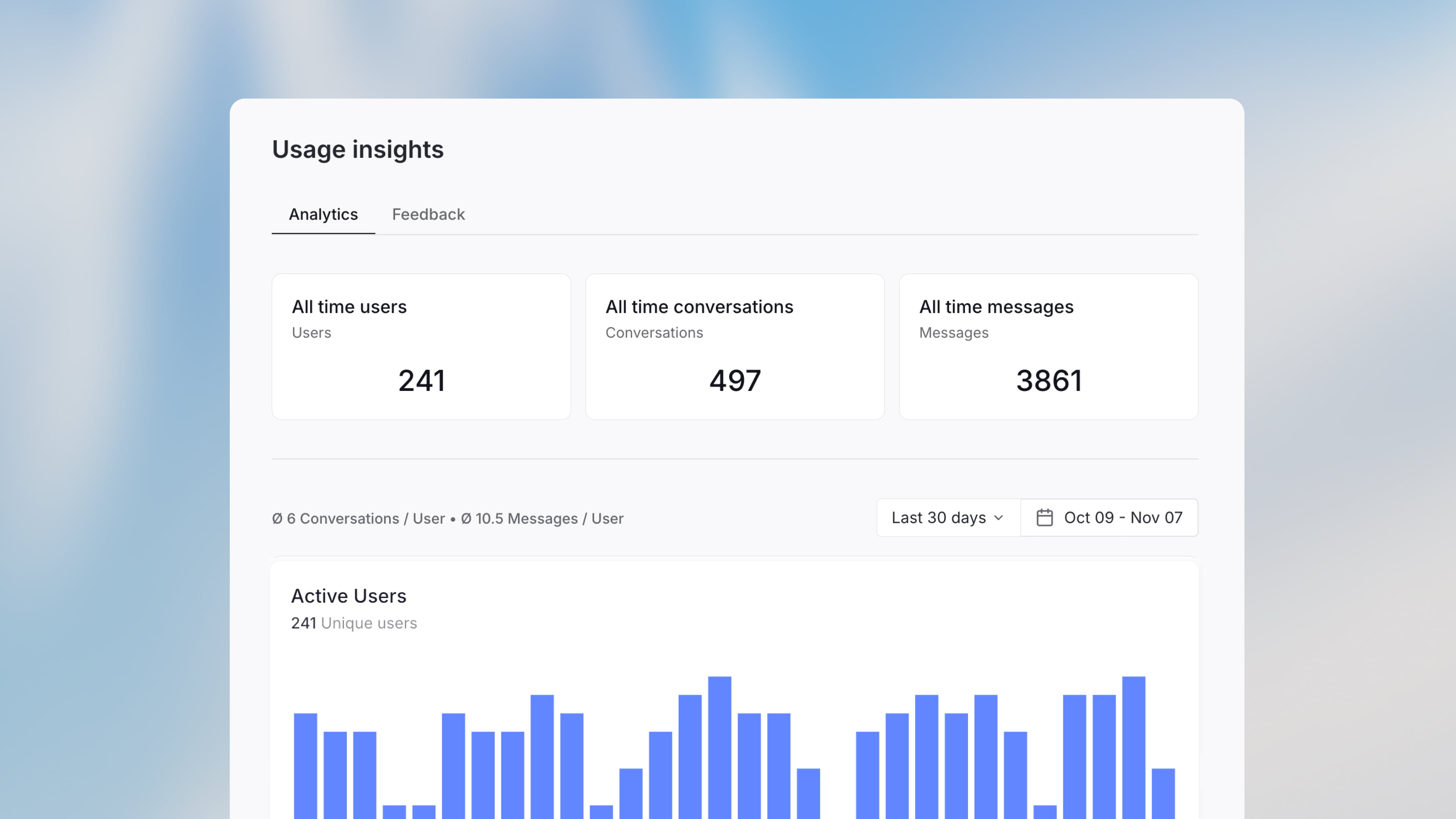

Assistant Analytics

Gain valuable insights into how your assistants are being used with the new assistant usage insights feature available in Langdock.

Users can now upvote or downvote responses and leave comments, providing direct feedback that can help you improve your assistant's configuration. This interaction enhances the user experience and offers concrete suggestions for improvement in the feedback tab.

In the analytics tab, editors and owners of an assistant can access quantitative data about usage over specific timeframes. The number of messages, conversations and users helps you understand user engagement and identify needs.

With these insights, assistant creators can assess performance and make informed improvements of the configuration, leading to a more effective and user-friendly assistant.

- Smaller improvements to canvas: We improved the performance and responsiveness of canvas on mobile. The streaming animation now runs more smoothly, and models with canvas trigger the feature more reliably.

- New file format: We added support for .eml files.

- Resize split screen: The Canvas split screen and the assistant split screen can now be resized horizontally by dragging the border in the middle.

- Increased image file size: You can now upload images up to 20MB.